Neon Genesis Evangelion as a view on the Singularity

Throughout these pages, and my fanac elsewhere, I tend to assume some basic level of familiarity with the concept of the Technological Singularity; this page is intended as a beginner's guide to the concept, and a starting point to learn more, as well as an essay on the Singularitarian aspects of NGE. There is a lot of jargon, but I will try to explain as I go along. If all else fails there are useful Lexicon and concept introduction sites.

What is the Singularity?

Progress is getting faster. Moore's Law (component density on silicon doubles in less than two years) has held for forty years, yielding more than a millionfold improvement. I'm probably wearing more computing power than the average computer had when I was born (and those filled rooms); my car probably contains more computing power than most nations had, and my house certainly does. Music that came on brittle, warp-prone 12" vinyl disks containing under 25 minutes a side now comes for hours in digital purity, in a box the size of a pack of cards. [UPDATE: Since this page was written in early summer '05, we have had the iPod mini and nano models, which are getting to the point where the size of the battery is the limiting factor.]
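The millionfold figure is easy to check on the back of an envelope: forty years at one doubling every two years is twenty doublings, and two to the twentieth is a little over a million. Here is a minimal sketch of that arithmetic. The function name is my own, and the 18-month period in the second case is only an illustrative assumption standing in for "less than two years", not a measured value.

```python
def improvement_factor(years: float, doubling_period_years: float) -> float:
    """Total growth after `years` if a quantity doubles every `doubling_period_years`."""
    doublings = years / doubling_period_years
    return 2.0 ** doublings


# Forty years at exactly one doubling every two years: 20 doublings.
factor_two_year = improvement_factor(40, 2.0)

# Assumed faster case (hypothetical 18-month doubling period, for illustration only).
factor_18_month = improvement_factor(40, 1.5)

print(f"2-year doubling:   {factor_two_year:,.0f}x")
print(f"18-month doubling: {factor_18_month:,.0f}x")
```

With a two-year doubling period the factor comes out at 1,048,576, so "more than a millionfold" is the conservative end of the claim; any shorter doubling period only makes the number larger.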

At its simplest, the weakest form of Singularity is what happens if this increasing rate of improvement keeps going on for a few more decades; a time when things have changed so much that we cannot imagine what will then happen, save that change will keep on happening. (Arguably, from the point of view of someone from even 200-300 years ago, we are already on the far side of such a Singularity.)

What form might the Singularity take?

Simple answer: we don't — we can't — know, roughly by definition. But we can take some guesses.

This is where we move to the boundary between several disciplines: rigorous philosophical and technological speculation, applied theology, and SF. Science fiction provides a place where the outrageous speculations can be presented in digestible form and then feed back into the thinking of others. This is not new: SF has always had a mystical streak (when it is not concerning itself with being an “if this goes on” satire on current social trends, or providing a faraway place for rebadged historical adventures) that concerns itself with the forward-looking view on the Human Condition, and the destiny of the species. This has tended to feed back into the social groups that read SF, and inform their thinking, their serious speculation, and their actual works in the real world. This included “the Dream”, the mystical view of spaceflight as the next step after the emergence of life onto the land, especially noticeable in Arthur C. Clarke's early work, and which informed the ethos of many NASA engineers during the Apollo days.

Today, the obvious technologies are ones that are cheaper and more human-scale — computers and biotechnology.

The Technological Singularity and the issue of human destiny — what comes after humanity as we know it — really started to propagate as a meme following its emergence in an academically respectable form in an essay written in 1993 by Vernor Vinge, a computer scientist and SF writer with an interest in the transhuman.

Abstract

Within thirty years, we will have the technological means to create superhuman intelligence. Shortly after, the human era will be ended.

Is such progress avoidable? If not to be avoided, can events be guided so that we may survive? These questions are investigated. Some possible answers (and some further dangers) are presented.

though it was prefigured in more abstract form by Vinge's own SF-detective novel Marooned in Realtime and even earlier in Clarke's Childhood's End, amongst others; and the inference IF AI THEN super-human AI was obvious enough that I recall articulating it in discussions in student days, 15 years before Vinge's essay, though in the interim the most obvious change was that internetworked computational power became cheap and ubiquitous enough for the raw materials to be obvious.

On the other front, compared with the development of superhuman intelligences from scratch, there is the idea of amplifying existing natural intelligence. This could range from simple prosthetics such as Google to direct upgrades to processing power through biotechnological means.

So the Singularity is inevitable, and we may only have fine control over it?

No — we may manage to destroy the technological infrastructure (war, ecological catastrophe, plague — the usual suspects, really); but if we don't…

And we already have overt groups like the Singularity Institute who are trying to turn the Singularity into a self-fulfilling prophecy, and to make it manifest in the least worst possible form, where the transhuman AI created is “Friendly”, i.e. humane by choice.

Doesn't this start to sound a lot like the attempts to control the form of the Third Impact, an event seen as inevitable by NERV and SEELE alike in NGE?

Whispers of the Singularity

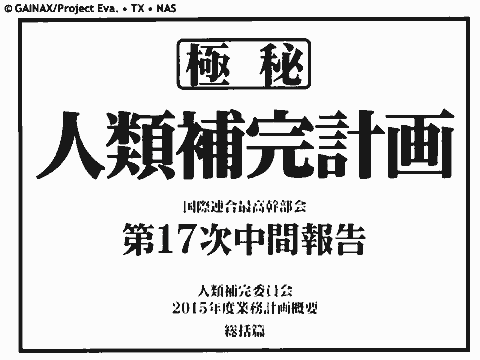

Top Secret

Human Instrumentality Project

International Alliance Supreme Executive Council

17th Interim Report

Human Instrumentality Committee

2015 Business Plan Outline

Summary

This, in episode 2 of the show (and also present in the intro montage, though the ADV subtitling translated it as Human Enhancement Project), was enough to make me sit up and take notice, even before Third Impact was mentioned. Were they really trying to build something super-human? Was this more than just a coming-of-age story with giant robots?

Surviving the Superhuman

Since the Singularity, if it happens at all, is quite likely to take place within our lifetimes, we need to consider what survival of our current human selves might mean. The spectrum is large, from unaltered humans being kept as pets by some fraction of the godlike intellects that we have spawned, to actually becoming superhuman (or part of one) ourselves.

In the world of strong AI, where machine intelligence is equivalent to human, it becomes reasonable to consider the person as program; just needing to be installed on a suitable brain emulator — a process known as uploading (if the emulator is at least as fast as the organic brain, otherwise it's downloading).

There are a number of deep philosophical issues here, such as whether the persona running on the new hardware is the same person or not — but then are you the same person you were half a lifetime ago? Consciousness appears to be (as per Dennett) built up long (as much as seconds) after the fact, so if the program feels like you, is that any different to the you you would be tomorrow in any case? Of course in the limiting case where the upload takes place one neuron at a time, incrementally replacing your brain with an emulator, that is no different to the normal process whereby our brains change with time.

Then, once installed on a substrate in which it can perform direct self-modification, the human consciousness could itself bootstrap to the superhuman; though in doing so it would inevitably have to cut away some of the dross that has built up in a randomly evolved mentality.

Actually, this is something that we might want to do even without the buildup to super-humanity — hedonic engineering, to remove the burden of suffering, for example; and without the negatives inferred from a protective sour grapes mindset in Huxley's Brave New World.

OK, so we have a state where we can transfer a living human consciousness to another hardware platform — but with unlimited superhuman brains working on the problem what more can we do?

As medical technology has advanced, we have been able to revive people who in an earlier day would have been given up for dead, primarily by restarting their hearts. And those people have been indistinguishable from the way they were before, assuming the revival was prompt enough that the brain was not organically damaged by oxygen lack. Degenerative brain diseases aside, it seems likely that the neuronal replacement model can be applied to those who are somewhat dead, just so long as it is possible to work backwards from the current state to the active person. The limit at which such retrodiction can be performed, due to the nature of decay and entropy, is referred to as information-theoretic death.

For fictional treatments of uploads (in the absence of superhuman intelligences) Greg Egan is your man (his novels Permutation City, and Schild's Ladder, in particular — though beware, the latter is Hard SF in the sense of hard==difficult, being based on some deep quantum gravity research he's been involved in).

What sort of superhuman?

God — or at least the Deus Futurus ex Machina — alone knows.

One benign situation would be where the superhuman intelligence acts as neutral and fair arbiter for all the human intelligences within its scope; the so-called SysOp scenario, where the superintelligence serves as an “operating system” for all the matter in the Solar System or beyond, a sort of living peace treaty with the power to enforce itself, ready to do whatever the “inhabitants” ask so long as it does not infringe on any other's rights. In its interactions with merely human intelligences, it would need some form of interlocutor: a rôle akin to bodhisattva, one who could ascend, but pauses to assist others. In the jargon, this sort of entity is a Transition Guide, the bridge between modern-day humanity and the other side of a Friendly Singularity.

Now it is likely in scenarios where the not-yet-superhuman AI achieves superhumanity by feedback to improve its own operation (recursive self-improvement), that there will be a hard takeoff, the scenario in which a mind makes the transition from prehuman or human-equivalent intelligence to strong transhumanity or superintelligence over the course of days or even just hours.

Is this all starting to sound familiar?

Third Impact — a hard-takeoff SysOp Event

Bootstrapped from the nigh-Transcendent metabiotechnology of the precursor civilisation that created the Seeds of Life, Adam and Lilith, the events we see in EoE seem to fit this model.

At its core, Giant Naked Rei, being a fusion of Adam and Lilith, Rei, and whatever parts of Ikari Yui were incorporated into her, is the suddenly emergent Transhuman Intelligence. The hordes of lesser Reis, manifesting as the most beloved of each of the characters (in most cases), are the transition guides.

In this case, the upload is not a voluntary thing (the scenario is not 100% Friendly by necessity); and it is no different from the early involuntary subsumption by superintelligences in Greg Bear's Blood Music.

There are, of course, some differences from the true SysOp scenario, in that there is a privileged human at the controls, and one who does not meet the definition of Friendliness (“Nobody wants me. So, everybody just die.”). Though GNR as quasi-SysOp questions this command repeatedly, he has an overriding say in what transpires, including commanding the system to crash and burn, regardless of any other mentalities.

Further Reading

Most of the links on this page will lead you into a net of primary sources on the subject of the transhuman, and improving the human condition. Two other sites I've not directly linked to that will be of relevance are